AMD Launches New Instinct MI350 AI Accelerator

According to foreign media reports, AMD will release the new Instinct MI350 series of AI accelerators this Thursday, marking another important advance in the company's AI hardware. Built on TSMC's 3nm process and AMD's latest CDNA 4 architecture, the new series competes directly with NVIDIA's Blackwell series on AI computing performance. Beyond the hardware specifications, the MI350 series also improves the compatibility and efficiency of AI applications through an optimized ROCm software ecosystem, targeting data centers and hyperscale AI computing.

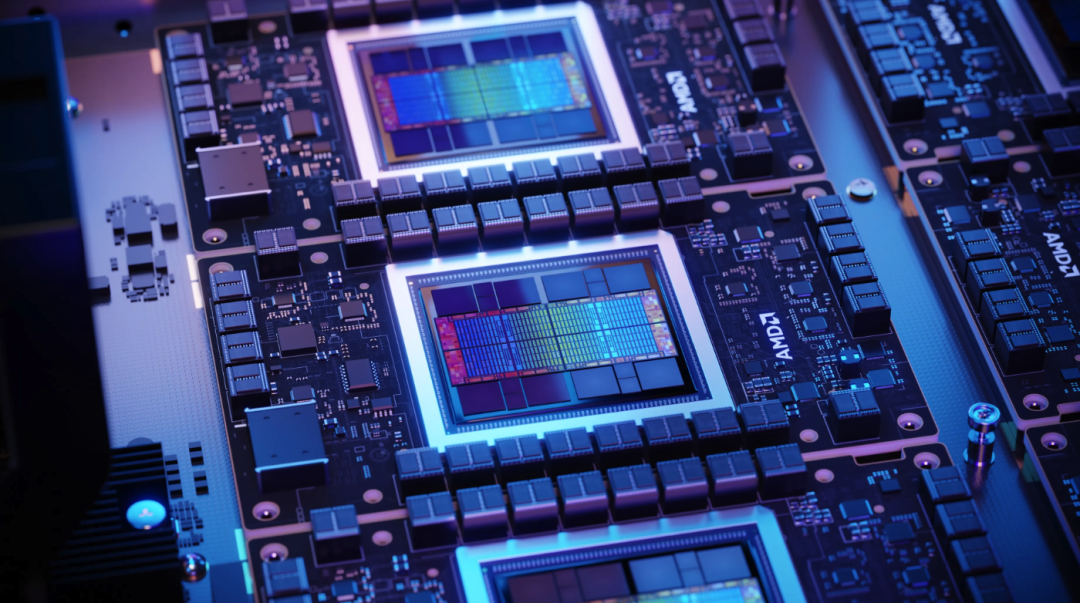

The core highlight of the MI350 series is its hardware configuration. A single card carries up to 288GB of HBM3E memory with 8TB/s of memory bandwidth, increases of 12.5% and 33.3% respectively over its predecessor, the MI325X, which has 256GB of memory and 6TB/s of bandwidth. In terms of compute, the MI350 series supports multiple floating-point precision formats, including FP16, FP8, FP6, and FP4, with FP16 performance reaching 18.5 PFlops, FP8 reaching 37 PFlops, and FP6/FP4 reaching as high as 74 PFlops. Compared to the MI300X, FP16 performance has increased by approximately 7.4 times, and the model parameter capacity has risen from 714 billion to 4.2 trillion, an increase of nearly 6 times. These specifications let the MI350 series handle training and inference for large language models and mixture-of-experts models with trillions of parameters.

The CDNA 4 architecture is the key to this performance leap. Compared to the CDNA 3-based MI325X, CDNA 4 adds support for the FP4 and FP6 low-precision data types, which reduce the compute and memory cost per parameter and make it particularly suitable for quantized large models and inference tasks. In addition, the 3nm process further improves transistor density and energy efficiency. Single-card power consumption is expected to exceed 1000W, in the same range as NVIDIA's B200 at 1000W and GB200 at 1700W.

The MI350 series also adopts advanced packaging technology and supports a single-platform, eight-card configuration with a total memory capacity of up to 2.3TB and a total bandwidth of up to 64TB/s, providing sufficient computing resources for ultra-large AI workloads.
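
The arithmetic behind these figures can be checked with a short Python sketch. It uses only the numbers quoted above. The weights-only footprint formula (parameters × bits ÷ 8) and the idea that the 4.2 trillion parameter figure refers to the eight-card platform are assumptions made for illustration, not statements from AMD.

```python
# Figures as quoted in the article; treat them as reported, not measured.
MI350_MEMORY_GB = 288
MI325X_MEMORY_GB = 256
MI350_BANDWIDTH_TBS = 8
MI325X_BANDWIDTH_TBS = 6
CARDS_PER_PLATFORM = 8
QUOTED_PARAMS = 4.2e12  # "4.2 trillion" parameters

# Bits per parameter for each precision format named in the article.
BITS_PER_PARAM = {"FP16": 16, "FP8": 8, "FP6": 6, "FP4": 4}


def pct_increase(new: float, old: float) -> float:
    """Relative increase of `new` over `old`, in percent."""
    return (new - old) / old * 100.0


def weights_footprint_gb(num_params: float, bits: int) -> float:
    """Weights-only footprint in GB (1 GB = 1e9 bytes).

    Assumption: ignores activations, KV cache, and optimizer state.
    """
    return num_params * bits / 8 / 1e9


platform_memory_gb = MI350_MEMORY_GB * CARDS_PER_PLATFORM          # 2304 GB, about 2.3TB
platform_bandwidth_tbs = MI350_BANDWIDTH_TBS * CARDS_PER_PLATFORM  # 64 TB/s

print(f"Memory gain:    {pct_increase(MI350_MEMORY_GB, MI325X_MEMORY_GB):.1f}%")
print(f"Bandwidth gain: {pct_increase(MI350_BANDWIDTH_TBS, MI325X_BANDWIDTH_TBS):.1f}%")
print(f"Platform total: {platform_memory_gb} GB, {platform_bandwidth_tbs} TB/s")

for fmt, bits in BITS_PER_PARAM.items():
    footprint = weights_footprint_gb(QUOTED_PARAMS, bits)
    fits = "fits" if footprint <= platform_memory_gb else "does not fit"
    print(f"{fmt}: {footprint:,.0f} GB of weights, {fits} in one platform")
```

Under these assumptions, only the FP4 case (about 2,100 GB of weights) fits within the 2.3TB of an eight-card platform, which suggests why low-precision formats matter for trillion-parameter models.
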

At the software level, AMD continues to optimize its open ROCm software stack to support the MI350 series. The latest version, ROCm 6.2, delivers a 2.4x improvement in inference performance and a 1.8x improvement in training performance over 6.0, and adds support for technologies such as FP8 data types, Flash Attention 3, and kernel fusion. AMD has worked with the open source community to integrate mainstream AI frameworks such as PyTorch, Triton, and ONNX into ROCm, so that the MI350 series can run popular generative AI models such as Stable Diffusion 3 and Llama 3.1, as well as the millions of models on the Hugging Face platform. This progress narrows the gap between the ROCm and NVIDIA CUDA ecosystems and gives developers a more flexible development environment.
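
As a rough illustration of what this framework integration looks like to a developer, the sketch below checks which GPU backend a PyTorch installation exposes. ROCm builds of PyTorch reuse the `torch.cuda` namespace and set `torch.version.hip`. The helper name `describe_accelerator` is hypothetical, and the output depends entirely on the local installation.

```python
def describe_accelerator() -> str:
    """Return a one-line description of the GPU backend PyTorch can see."""
    try:
        import torch
    except ImportError:
        return "PyTorch is not installed"

    # ROCm builds set torch.version.hip to a version string;
    # CUDA and CPU-only builds leave it as None.
    hip_version = getattr(torch.version, "hip", None)
    backend = f"ROCm/HIP {hip_version}" if hip_version else "non-ROCm build"

    if not torch.cuda.is_available():
        return f"{backend}: no GPU visible"

    # On ROCm builds the torch.cuda namespace addresses AMD GPUs,
    # so existing CUDA-style code paths usually need no renaming.
    return f"{backend}: {torch.cuda.get_device_name(0)}"


print(describe_accelerator())
```
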

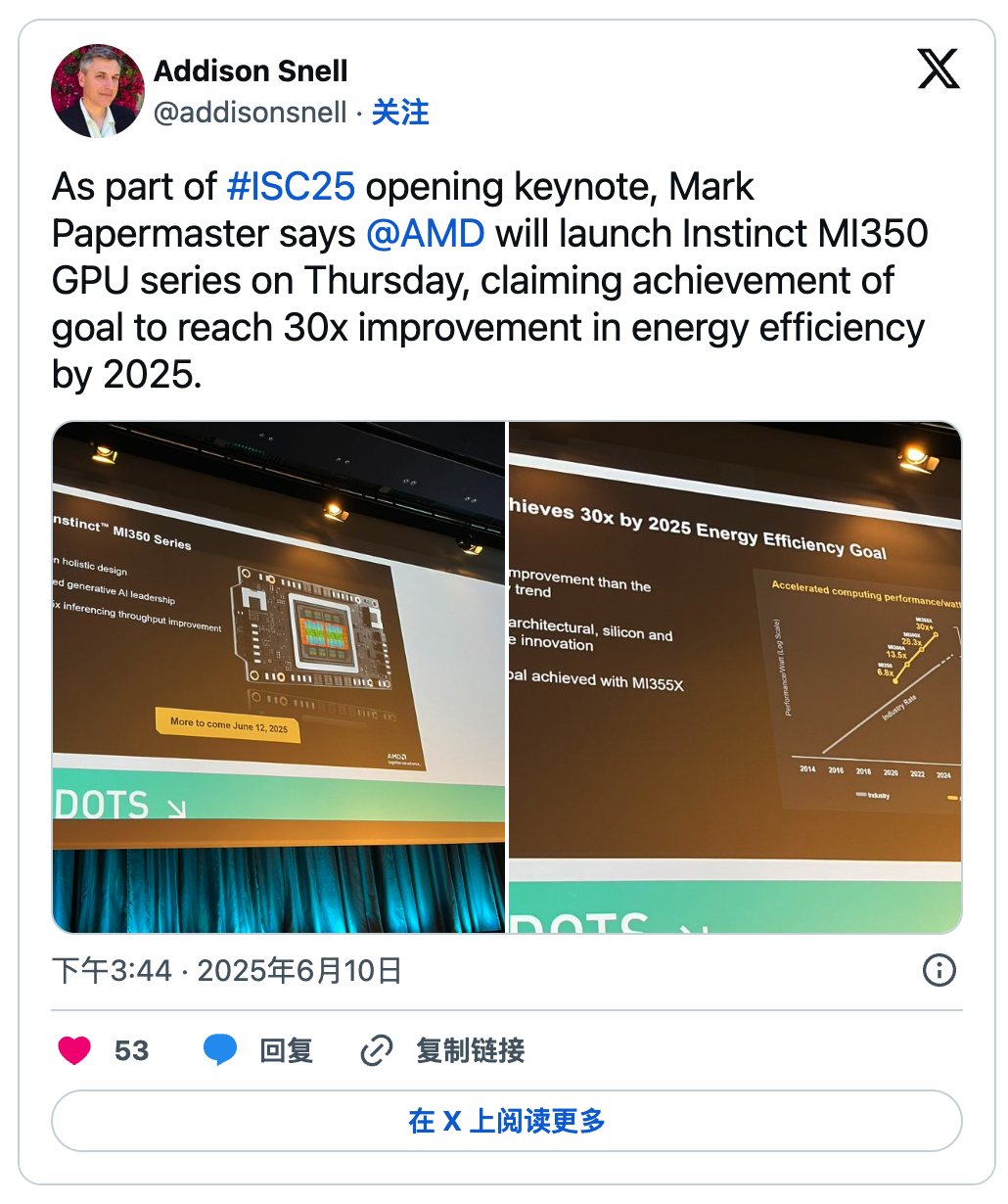

The release of the MI350 series reflects not only a hardware upgrade but also AMD's strategic positioning in the AI market. Compared to NVIDIA's Blackwell B200 (192GB HBM3E, 8TB/s bandwidth), the MI350 series has a 50% lead in memory capacity, comparable bandwidth, and a claimed inference performance gain of about 35 times, positioning it between Blackwell and Blackwell Ultra. Speaking at the ISC25 keynote, AMD Chief Technology Officer Mark Papermaster said the MI350 series is expected to deliver a 30x improvement in energy efficiency by 2025 through architectural and packaging innovations. This goal rests on the power efficiency of the 3nm process and the CDNA 4 architecture's optimization for low-precision computing, which give the MI350 a higher performance-to-power ratio in high-performance computing (HPC) and AI training.

The MI350 series is expected to launch officially in the second half of 2025. The first batch of products includes the MI355X accelerator, which partners such as Dell, Lenovo, and HP will integrate into server platforms. AMD also plans to launch the MI400 series, based on the CDNA 5 architecture, in 2026 to further improve performance and efficiency. AMD's AI accelerators are already used in a variety of applications, and the MI350 series will further strengthen AMD's competitiveness in the data center AI market.

However, AMD still faces challenges in the AI hardware space. Supply constraints on HBM3E memory may affect initial production capacity for the MI350. AMD's lead time is 26 weeks, while NVIDIA's is more than 52 weeks, reflecting strong market demand for high-performance AI chips. In addition, the ROCm ecosystem, although developing rapidly, still trails CUDA in end-to-end functionality. AMD is accelerating ecosystem development by partnering with more than 100 AI application developers, but it is too early to say how effective this will be in practice.

The release of the MI350 series is an important step for AMD in the AI hardware competition. The combination of the 3nm process, the CDNA 4 architecture, and 288GB of HBM3E memory provides strong support for ultra-large-scale AI models, while the continued optimization of the ROCm ecosystem gives developers a flexible software environment. Compared to its predecessor, the MI350 series makes large gains in performance, efficiency, and model capacity, and its competition with the NVIDIA Blackwell series will drive technological advances across the AI hardware market. Going forward, AMD's annual product roadmap and continued architectural innovation should further solidify its position in AI computing and bring more high-performance, low-cost solutions to the industry.
