NVIDIA Expands Data Center Portfolio with GB200 NVL4 and H200 NVL PCIe GPU

NVIDIA expanded its data center product line at the SC24 supercomputing conference, unveiling the GB200 NVL4 and the H200 NVL PCIe GPU.

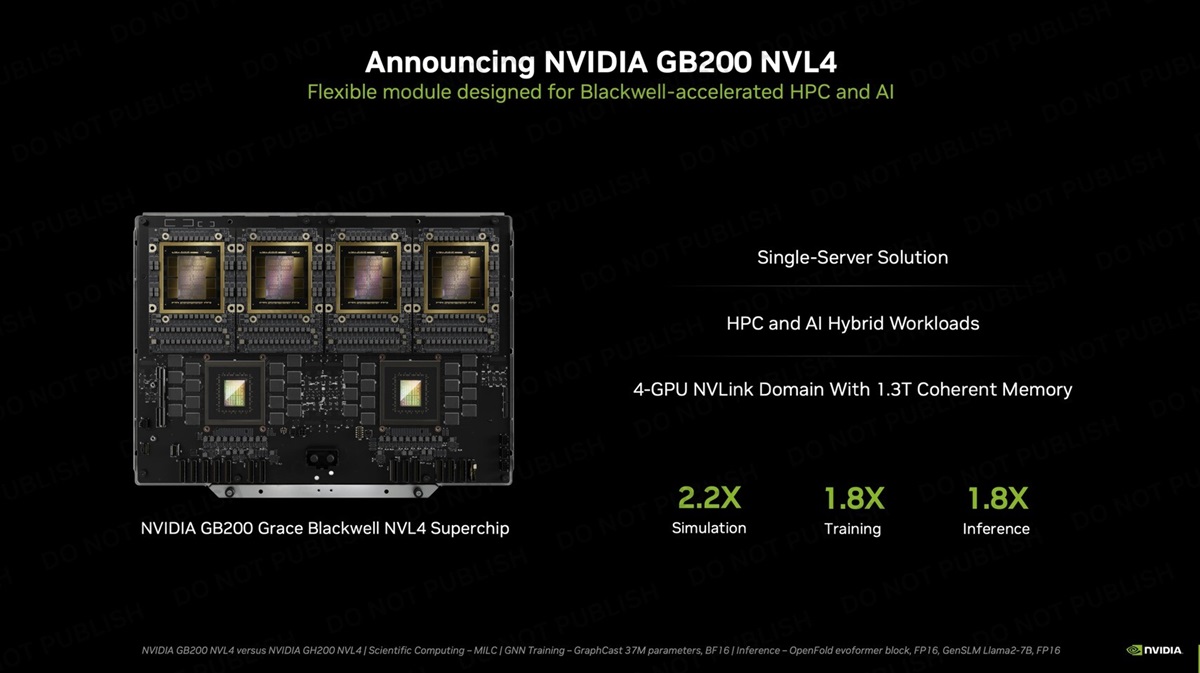

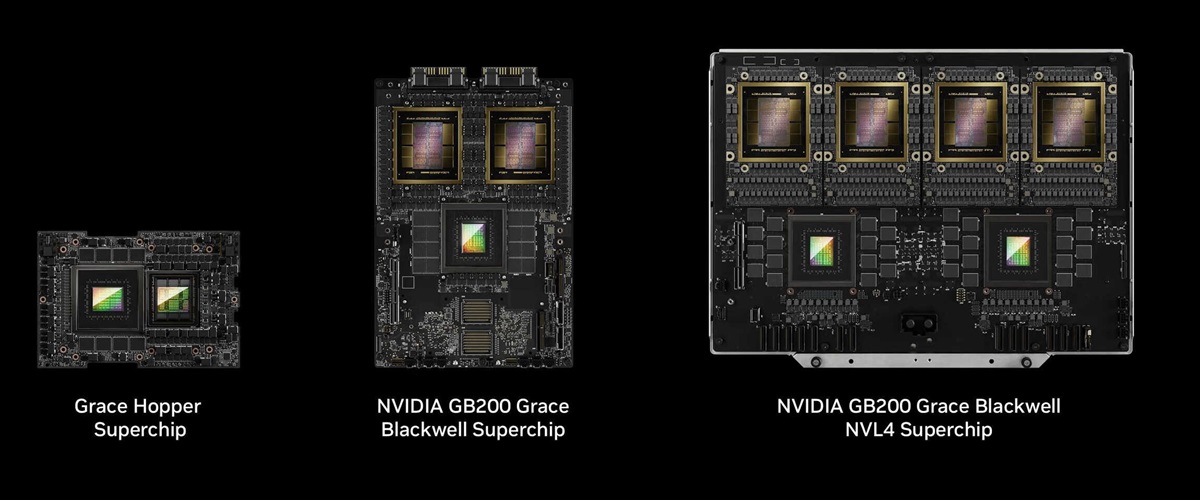

The GB200 NVL4 is a single-server solution that extends the Blackwell lineup. It combines four Blackwell GPUs and two Grace CPUs on a single motherboard, linked by NVLink interconnects. With 768GB of HBM3E and 960GB of LPDDR5X memory, it offers a combined memory bandwidth of 32TB/s. NVIDIA targets this configuration at demanding AI workloads.
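The aggregate figures are easier to compare with single-GPU parts when divided across the devices in the module. The Python sketch below does that arithmetic. It assumes each aggregate is split evenly across identical devices and attributes the 32TB/s bandwidth to the GPUs' HBM3E alone; the constant and function names are illustrative, not from NVIDIA.

```python
# Per-device breakdown of the GB200 NVL4 figures quoted in the article.
# Assumptions (not stated in the announcement): each aggregate is split evenly
# across identical devices, and the 32TB/s figure comes from the GPUs' HBM3E.

NUM_GPUS = 4            # Blackwell GPUs per GB200 NVL4
NUM_CPUS = 2            # Grace CPUs per GB200 NVL4
HBM3E_TOTAL_GB = 768    # GPU-attached memory
LPDDR5X_TOTAL_GB = 960  # CPU-attached memory
COMBINED_BW_TBPS = 32   # quoted combined memory bandwidth


def per_device(total: float, count: int) -> float:
    """Split an aggregate figure evenly across `count` identical devices."""
    if count <= 0:
        raise ValueError("count must be positive")
    return total / count


if __name__ == "__main__":
    print(f"HBM3E per GPU:     {per_device(HBM3E_TOTAL_GB, NUM_GPUS):.0f} GB")
    print(f"LPDDR5X per CPU:   {per_device(LPDDR5X_TOTAL_GB, NUM_CPUS):.0f} GB")
    print(f"Bandwidth per GPU: {per_device(COMBINED_BW_TBPS, NUM_GPUS):.0f} TB/s")
    print(f"Total memory:      {HBM3E_TOTAL_GB + LPDDR5X_TOTAL_GB} GB")
```

Under these assumptions the script reports 192GB of HBM3E per GPU, 480GB of LPDDR5X per CPU, 8TB/s per GPU, and 1,728GB of memory in total.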

According to NVIDIA, the GB200 NVL4 delivers 2.2 times the simulation performance, 1.8 times the AI training performance, and 1.8 times the AI inference performance of the GH200 NVL4. The system draws 5,400W and relies on liquid cooling to sustain that performance. It is expected to be deployed in the server racks of large-scale computing customers.

NVIDIA's H200 NVL PCIe GPU is the latest addition to the Hopper family. It is designed for enterprise data centers and fits into lower-power, air-cooled rack designs, letting those facilities accelerate a wide range of AI and HPC applications. A recent survey indicates that approximately 70% of enterprise racks operate at 20kW or below and rely on air cooling, which is why PCIe GPUs remain important.

The H200 NVL PCIe GPU is a dual-slot card with a TDP of 600W, lower than the 700W of the H200 SXM. The lower power budget reduces INT8 Tensor Core throughput by 15.6%, but the card still supports 900GB/s NVLink connections in two-way or four-way configurations. Compared with the H100 NVL, the H200 NVL offers 1.5 times the memory, 1.2 times the memory bandwidth, and 1.7 times the AI inference performance, along with 30% higher HPC application performance.
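A rough power-budget calculation puts the 600W TDP in context against the 20kW air-cooled racks described above. The Python sketch below counts only GPU board power and ignores host CPUs, networking, fans, and power-supply losses, so its GPU count is a loose upper bound rather than a deployable configuration. All names are hypothetical.

```python
# Back-of-the-envelope arithmetic on figures quoted in the article.
# Assumption: only GPU board power counts against the rack budget; CPUs,
# memory, NICs, fans and PSU losses are ignored, so real counts are lower.

RACK_BUDGET_W = 20_000  # "20kW or below" enterprise rack from the survey figure
H200_NVL_TDP_W = 600    # H200 NVL PCIe TDP
H200_SXM_TDP_W = 700    # H200 SXM TDP, for comparison


def percent_reduction(baseline: float, new: float) -> float:
    """Relative decrease from `baseline` to `new`, in percent."""
    return (baseline - new) / baseline * 100.0


def max_gpus_in_budget(budget_w: int, tdp_w: int) -> int:
    """Upper bound on GPUs whose combined TDP fits within the budget."""
    return budget_w // tdp_w


if __name__ == "__main__":
    drop = percent_reduction(H200_SXM_TDP_W, H200_NVL_TDP_W)
    print(f"TDP reduction vs. H200 SXM: {drop:.1f}%")
    print(f"GPU-only upper bound per 20kW rack: "
          f"{max_gpus_in_budget(RACK_BUDGET_W, H200_NVL_TDP_W)}")
```

With these inputs the script reports a 14.3% TDP reduction relative to the H200 SXM and a GPU-only upper bound of 33 cards per 20kW rack.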

Related News

- GIGABYTE Launches AI TOP ATOM Desktop Supercomputer Delivering 1000 TOPS Performance

- Comparison of NVIDIA RTX Pro 6000 and RTX 5090

- AMD to Launch Upcoming Radeon PRO Graphics Card with 32GB Memory

- Intel Isn't Giving Up on Desktop GPUs as New High-End Card Quietly Appears on Shipping List

- NVIDIA Faces Setback: GB300 Sales Fall Short of Expectations

- Asus Unveils the Ascent GX10: A Desktop Supercomputer with NVIDIA GB10

- Rumor: Oracle Acquires and Deploys Thousands of AMD MI355X AI Accelerator Chips

- Jaguar Shores: Intel's Next-Generation AI Chip to Succeed Falcon Shores

- NVIDIA Blackwell GPU's First Benchmark Results: Up to 2.2x Performance Boost

- Shockwaves in the Chip Industry! Reports Claim Apple, Samsung, and Qualcomm Compete to Acquire Intel